How do physical thinkers model the physical?

How do mindreaders’ belief-tracking systems model minds?

The notion of model complements that of system.

The idea is going to be that different belief-tracking systems rely on different models of the mental.

But I'm getting ahead of myself.

Let me start with a simple question.

How do mindreaders model minds?

Or, if you accept the conjecture, we should really be asking about how different

belief-tracking systems model minds.

This question needs explanation.

Let me explain the question by comparing an analogous question about

the physical ...

How do physical thinkers model the physical?

Focus just on the physical case first.

The question is, How do physical thinkers model the physical?

To say that a certain group of subjects can represent physical properties

like weight and momentum leaves open the question

of how they represent those things.

In asking how the subjects (infants, say) represent weight or momentum, we are aiming to

understand these things as infants understand them;

we are aiming to see them as infants see them.

(NB: I'm going to focus on human adults!)

How can we do this?

We need a couple of things [STEPS: (1) theories; (2) signature limits]

Impetus vs Newtonian

The first thing we need is theories of the physical or mental.

It is a familiar idea,

from the history of science,

that there are multiple coherent \textbf{theories} of the physical:

impetus and Newtonian mechanics, for example.

The impetus theory says that moving objects have something, impetus, that they gradually lose.

When they lose their impetus they stop moving.

If you push them you impart impetus to them, and that is why they move.

With Newtonian mechanics this is not the case; there is no impetus.

Theories specify models.

A theory isn't a model, and to say that someone relies on a model is not

to say that they necessarily know any theory.

But the theory describes a way the universe could be,

and so specifies one way that a process could model it as being.

The impetus theory isn't right but it is (broadly) coherent; it describes a way the world

could be. This is why it specifies a model.

2. flexibility/efficiency trade-offs

The second thing we need

(in order to answer the question about how humans model the physical)

is to understand

why different humans use different models of the physical.

What makes it useful to have different models of the physical for, say,

putting up a garden fence or landing a robot on a comet,

is that some models allow you to get answers quickly without too much effort

whereas other models, although harder to use, will provide accurate answers even

in situations far from the mundane.

So because we're interested in actual thinkers, we are looking for pairs (or sets)

of models that allow different trade-offs between efficiency and flexibility.

It's for just this reason that the contrast between impetus and Newtonian models

is interesting ...

impetus vs friction+air-resistance+...

Whereas a Newtonian theory requires computing several independent

forces such as friction and air resistance, which are both distinct

from the forces imparted in launching an object,

impetus mechanics

in effect rolls these all into a single thing, the object’s impetus.
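To make the efficiency point concrete, here is a minimal sketch of a sliding object under each kind of model. It is an illustration under stated assumptions, not anything from Kozhevnikov and Hegarty: the friction coefficient `mu`, the air-resistance coefficient `k_air`, the impetus `decay` rate, and the convention that an object moves at a speed equal to its impetus are all hypothetical choices. The Newtonian function must estimate two separate forces at every step; the impetus function tracks one quantity.

```python
G = 9.81  # gravitational acceleration, m/s^2


def newtonian_stop_time(v0, mass, mu, k_air, dt=0.001, max_t=60.0):
    """Seconds until a sliding object stops, summing separately estimated forces.

    mu is a kinetic-friction coefficient and k_air a quadratic air-resistance
    coefficient (kg/m); both are assumed, illustrative values.
    """
    v, t = v0, 0.0
    while v > 0 and t < max_t:
        friction = mu * mass * G           # force from the surface
        air = k_air * v * v                # force from the air, grows with speed
        v -= (friction + air) / mass * dt  # forward-Euler step of F = ma
        t += dt
    return t


def impetus_stop_time(v0, decay, dt=0.001, max_t=60.0):
    """Seconds until an object stops when every resistance is lumped into a
    single rate at which its impetus dissipates (one assumed parameter)."""
    impetus, t = v0, 0.0
    while impetus > 0 and t < max_t:
        impetus -= decay * dt  # impetus simply runs down
        t += dt
    return t
```

For example, `newtonian_stop_time(5.0, 1.0, 0.2, 0.05)` needs a friction coefficient and a drag coefficient to be estimated, whereas `impetus_stop_time(5.0, 2.0)` needs only a decay rate. That single parameter is the saving, bought at the price of not representing friction and air resistance separately.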

relational vs propositional

codifiable vs uncodifiable

A signature limit of a model is a set of predictions derivable from

the model which are incorrect, and which are not predictions of other

models under consideration.
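The definition can be read almost as a procedure. The sketch below is one hypothetical way to spell it out: the function name, the dictionary layout and the situation labels are illustrative assumptions, and the outcomes are coarse qualitative summaries (taking the Newtonian model to include air resistance), not data.

```python
def signature_limits(model, predictions, observed):
    """Situations that are signature limits of `model`.

    predictions maps each model name to {situation: predicted outcome};
    observed maps situations to actual outcomes. A situation qualifies if
    `model` predicts it incorrectly and no other model under consideration
    makes that same prediction.
    """
    mine = predictions[model]
    others = [p for name, p in predictions.items() if name != model]
    limits = set()
    for situation, predicted in mine.items():
        if situation not in observed or predicted == observed[situation]:
            continue  # untested or correct: not a limit
        if any(other.get(situation) == predicted for other in others):
            continue  # shared error: not a *signature* of this model
        limits.add(situation)
    return limits


predictions = {
    "impetus": {"sliding": "slows and stops",
                "vertical launch": "larger object slowed more"},
    "newtonian": {"sliding": "slows and stops",
                  "vertical launch": "larger object slowed less"},
}
observed = {"sliding": "slows and stops",
            "vertical launch": "larger object slowed less"}
```

With these toy inputs, `signature_limits("impetus", predictions, observed)` picks out only the vertical-launch case, because sliding is a case where the two models coincide.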

In a limited but common range of cases, impetus and Newtonian mechanics

coincide.

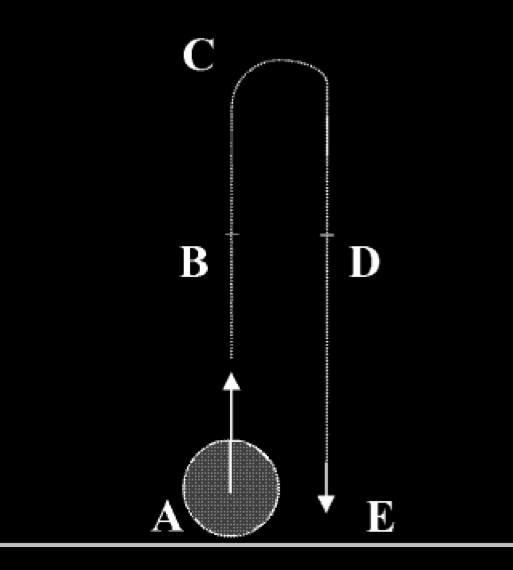

However, the two theories make different predictions about the acceleration of falling objects,

and of ascending objects (those launched vertically, in the manner of a rocket).

Consider ascending objects. We're fixing density and shape and considering how the size

of objects changes things.

According to Newtonian mechanics, if we ignore air resistance, then size makes no difference to

acceleration. If we include air resistance, larger objects accelerate faster: air resistance reduces their acceleration less

(because the ratio of mass to surface area increases with size).

By contrast, according to an impetus principle:

‘More massive objects accelerate at a slower rate. An object’s initial impetus continually

dissipates because it is overcome by the effect of gravity. The more massive the ascending object,

the more gravity counteracts its impetus.’ \citep[p.\ 445]{kozhevnikov:2001_impetus}

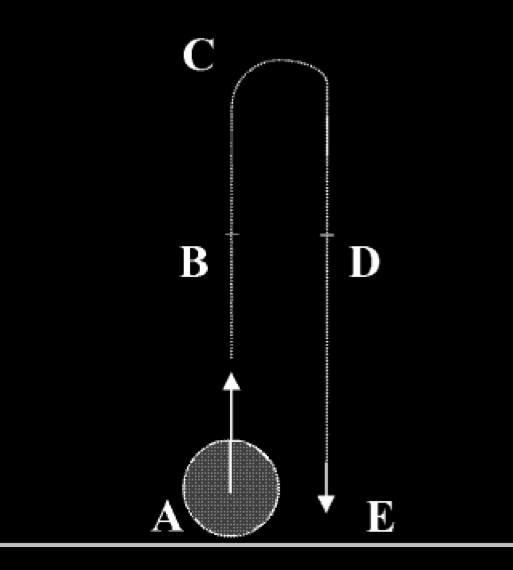

vertical launch

So vertical launch is a signature limit of impetus models of the

physical.
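Here is a rough sketch of the contrast for ascending spheres of fixed density and shape. It computes only the instantaneous acceleration at a given upward speed. The air density `RHO_AIR`, drag coefficient `C_D` and the quadratic drag law are standard textbook assumptions; `impetus_acceleration` is a hypothetical toy formalisation of the quoted impetus principle (its constant `k` is invented), not Kozhevnikov and Hegarty's own model.

```python
import math

G = 9.81       # gravitational acceleration, m/s^2
RHO_AIR = 1.2  # air density, kg/m^3 (assumed)
C_D = 0.47     # drag coefficient of a smooth sphere (assumed)


def sphere_mass(radius, density):
    return density * 4.0 / 3.0 * math.pi * radius ** 3


def newtonian_acceleration(speed, radius, density, air=True):
    """Acceleration (upward positive) of a sphere moving upward at `speed`."""
    a = -G  # gravity alone accelerates every size equally
    if air:
        area = math.pi * radius ** 2
        drag = 0.5 * RHO_AIR * C_D * area * speed ** 2  # grows with surface
        a -= drag / sphere_mass(radius, density)        # but mass grows faster
    return a


def impetus_acceleration(radius, density, k=0.1):
    """Hypothetical toy impetus rule: gravity overcomes impetus faster the
    more massive the object (k, per kg, is an invented scaling constant)."""
    return -G * (1.0 + k * sphere_mass(radius, density))
```

For water-density spheres of radius 5 cm and 10 cm moving up at 20 m/s, the Newtonian model with drag gives roughly -11.5 and -10.7 m/s² (the larger sphere is slowed less), while the toy impetus rule gives roughly -10.3 and -13.9 m/s², the opposite ordering.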

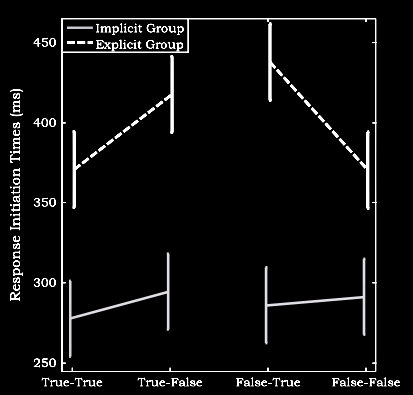

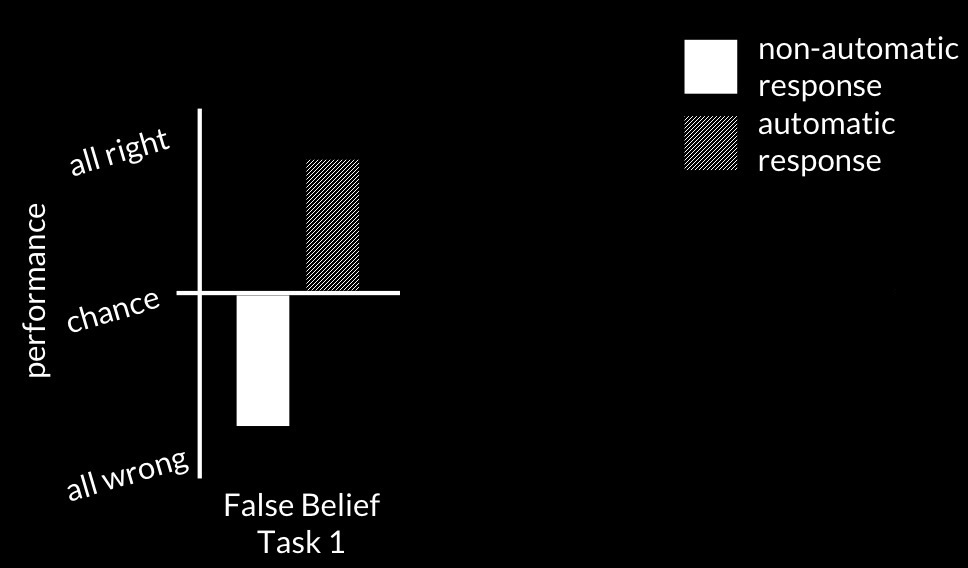

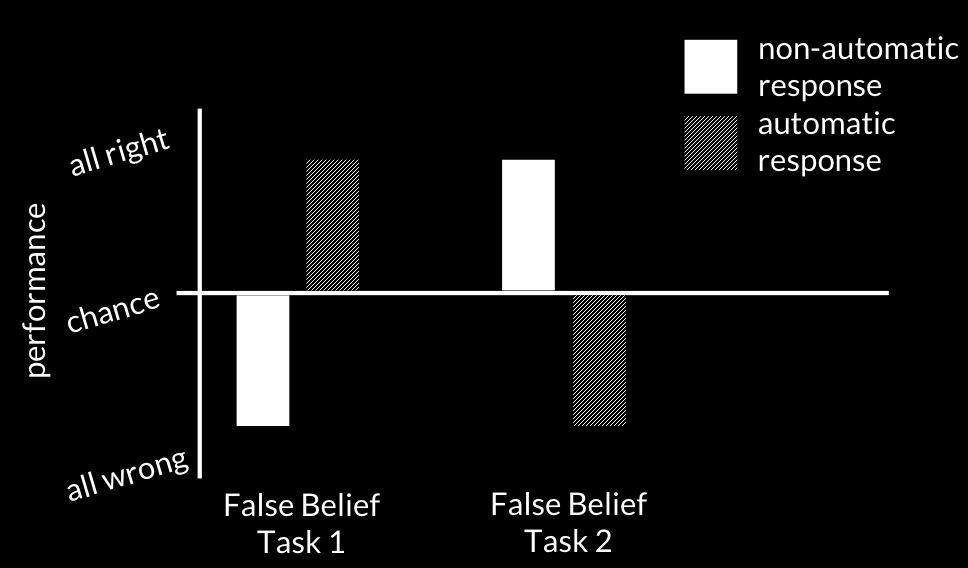

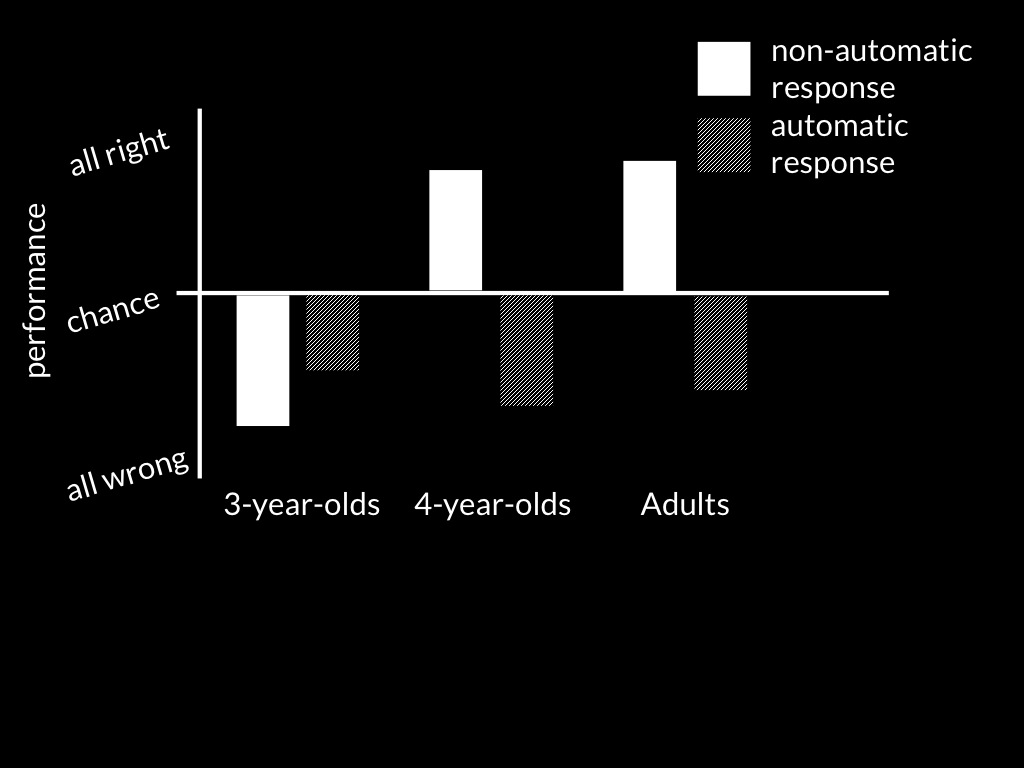

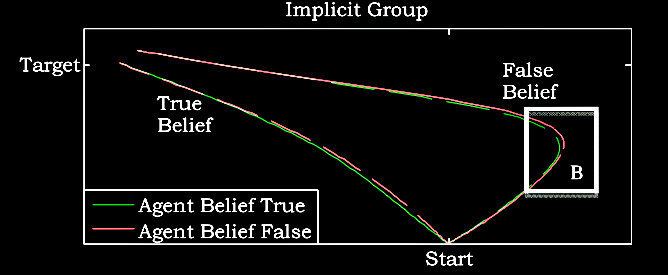

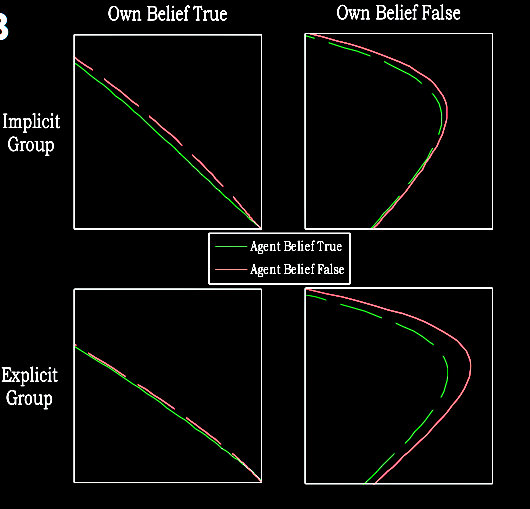

Interestingly, there is evidence that some automatic responses

show this signature limit of impetus models of the physical.

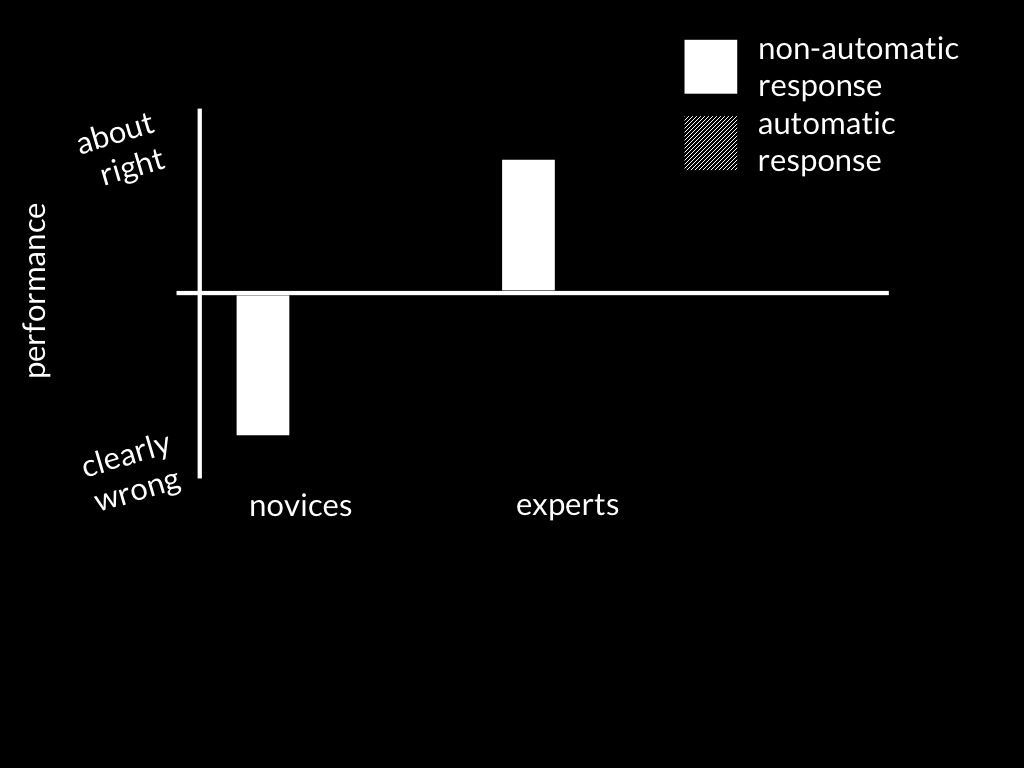

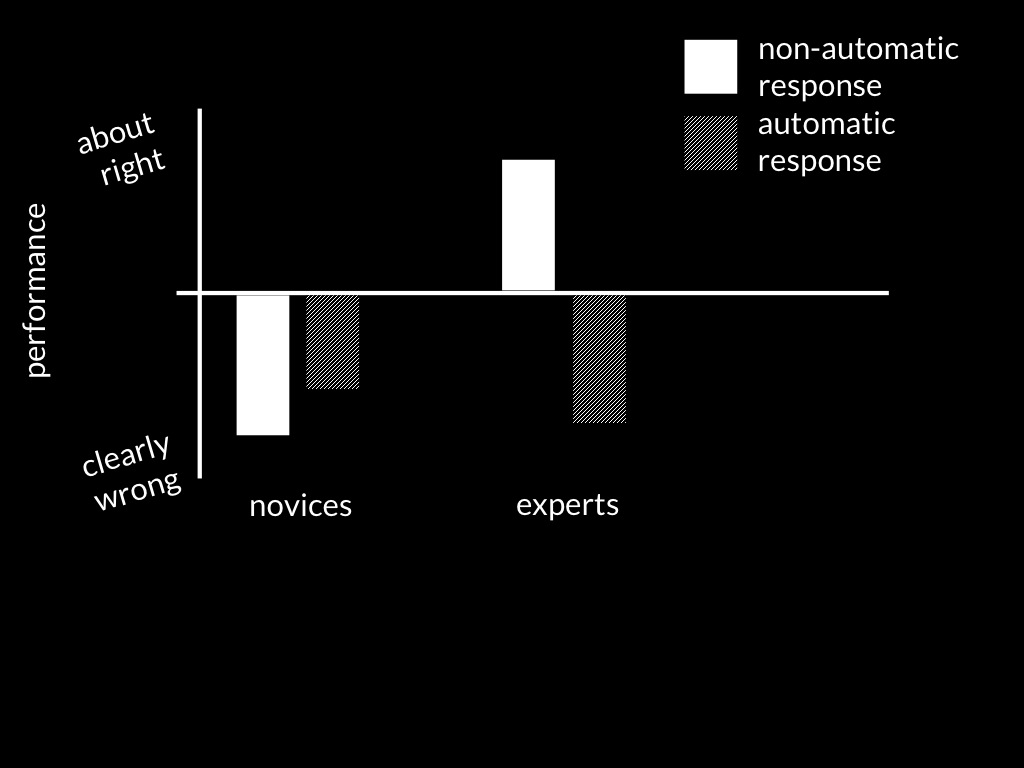

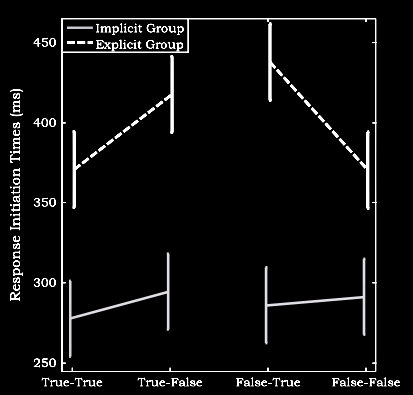

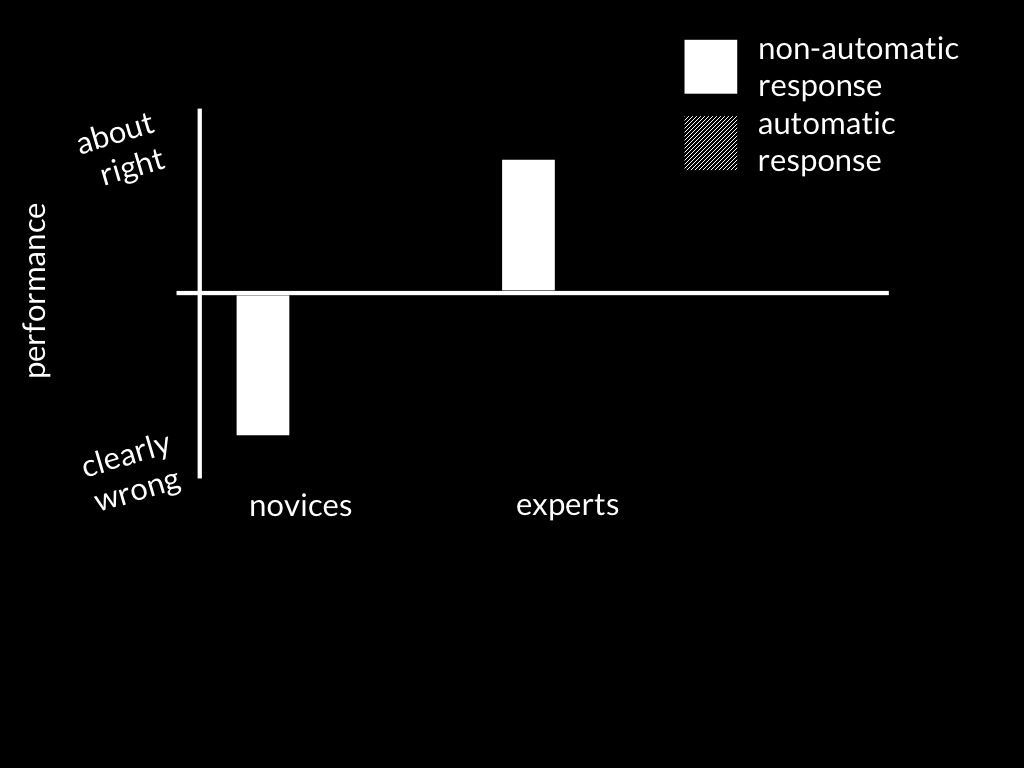

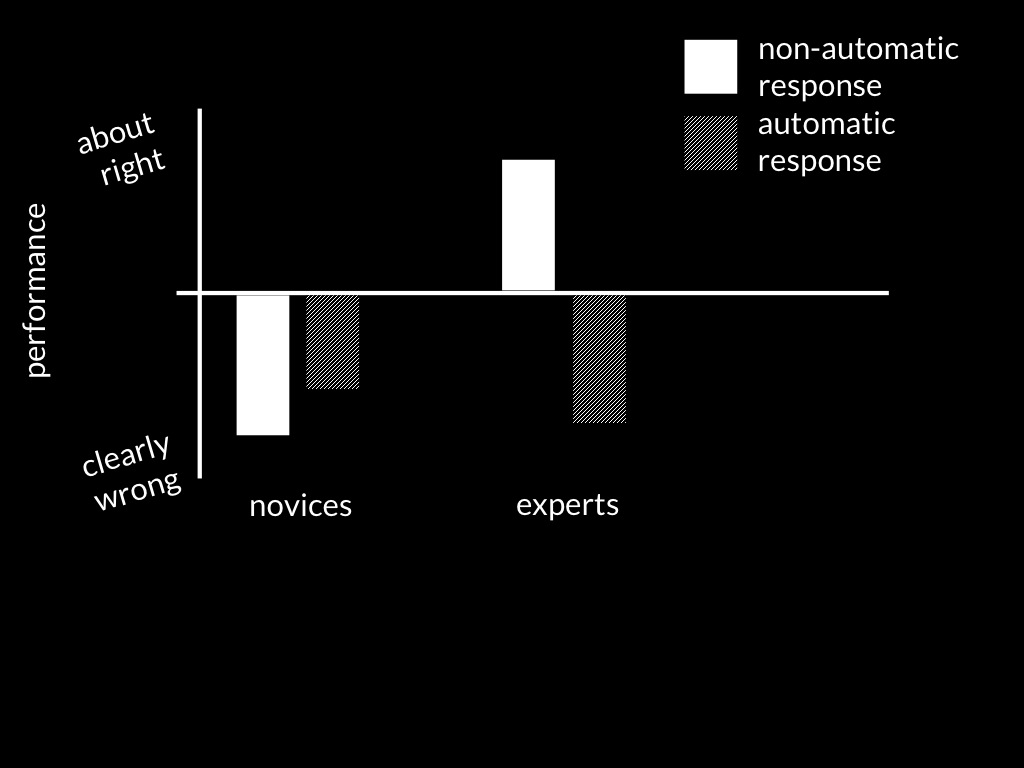

Here is, in a much simplified form, what Kozhevnikov and Hegarty

showed. First, you see that adults without knowledge of physics

tend to make non-automatic (explicit, verbal) predictions

in line with an impetus model, whereas experts make predictions that

conform to a Newtonian model.

But here's the really cool thing ...

[The simplification consists in ignoring the fact that they actually

measured the difference in predictions for small vs large objects;

so strictly speaking what the bars in the figure here represent

are the prediction about the large object’s position minus the

prediction about the small object’s position. Note that (unlike the

mind case), there is no claim about chance performance.]
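The plotted quantity in that note can be written compactly. Write \(\hat{y}\) for a predicted position of an ascending object at a given moment after launch (reading "position" as height is an assumption). The signs below are simply what the qualitative predictions described earlier lead one to expect; they are not results from the study.

\[
D = \hat{y}_{\mathrm{large}} - \hat{y}_{\mathrm{small}},
\qquad
D_{\mathrm{Newtonian,\ no\ air}} = 0,
\quad
D_{\mathrm{Newtonian,\ air}} > 0,
\quad
D_{\mathrm{impetus}} < 0.
\]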

Irrespective of whether you are a novice or an expert, your

automatic responses (representational momentum) are subject to a

signature limit of impetus mechanics.

This is evidence that those automatic responses rely on such an

impetus model of the physical, as Kozhevnikov and Hegarty argue.

So in the case of physical cognition, it makes sense to ask,

Which model of the physical does a particular process rely on?

And the answer seems to be that some automatic processes rely

on an impetus model, whereas some non-automatic processes rely on

a Newtonian model (at least in experts).

OK, that should be enough to show that the dogma should not be

assumed without argument.

Where someone is a mindreader, that is, is capable of identifying mental states,

we need to understand what model of the mental underpins her abilities.

So any time we have a mindreading process, it is sensible to ask,

What model of the mental does that process rely on?

Is there evidence for the positive claim that the dogma is wrong

and that different mindreading systems in humans rely on different

models of the mental?

I think there is.

Let me explain ...

Start with the theories first.

Where do we get theories that might allow us to identify how mindreaders model

the mental?

As in the case of the physical, we're interested in simple theories that only need to be

approximately correct.

Theories are the things that philosophers create when they do things

like trying to explain what an intention is (Bratman, for example, says he is giving a theory of intention).

Or when they try to explain how belief differs from

supposing, guessing and the rest.

Most of these theories are highly sophisticated and concern propositional attitudes only.

But what about the bad theories, the mental analogues of impetus theories?

Instead of going to the history of science for our bad theories,

we turn to early philosophical attempts to characterise mental states.

My favourite is Jonathan Bennett's.

These theories are hopeless when considered as accounts of adults' explicit thinking

about mental states.

But, like impetus theories of the physical, they provide inspiration for

very simple theories about the mental which make correct predictions of action

in a limited but important range of circumstances.

One attempt to codify the core part of a theory of the mental analogous to impetus

mechanics is provided in Butterfill and Apperly's paper about how to construct

a minimal theory of mind.

I can't explain it in detail here, but minimal theory of mind is like impetus mechanics.

It's obviously flawed and gets things quite wildly wrong,

but it is still useful in a limited range of circumstances.

Butterfill and Apperly's minimal theory of mind identifies a model of the mental.

I'm not going to describe the construction of minimal theory of mind,

but I've written about it with Ian Apperly and outlined the idea on your

handout.

The construction of minimal theory of mind is an attempt to describe how

mindreading processes could be cognitively efficient enough to be automatic.

It is a demonstration that automatic belief-tracking processes could be mindreading processes.

For this talk, the details don't matter.

What matters is just that it's possible to construct minimal models of the mental

which are powerful enough that using them would enable you to solve some false belief

tasks.

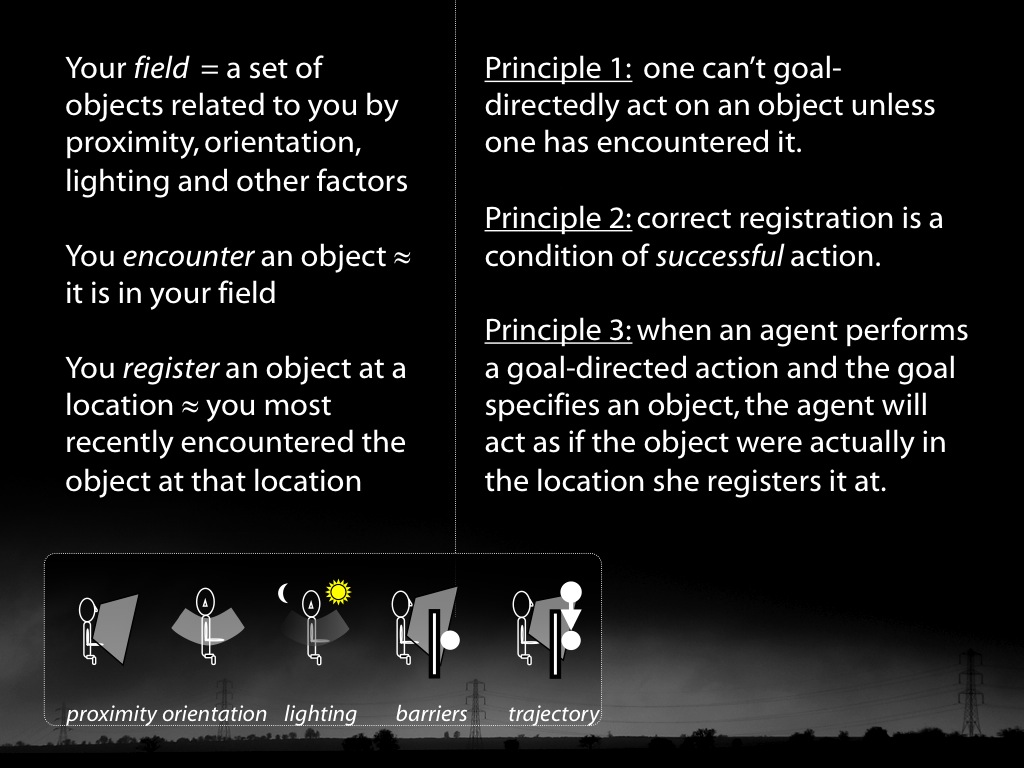

\section{Minimal theory of mind\citep{butterfill_minimal}}

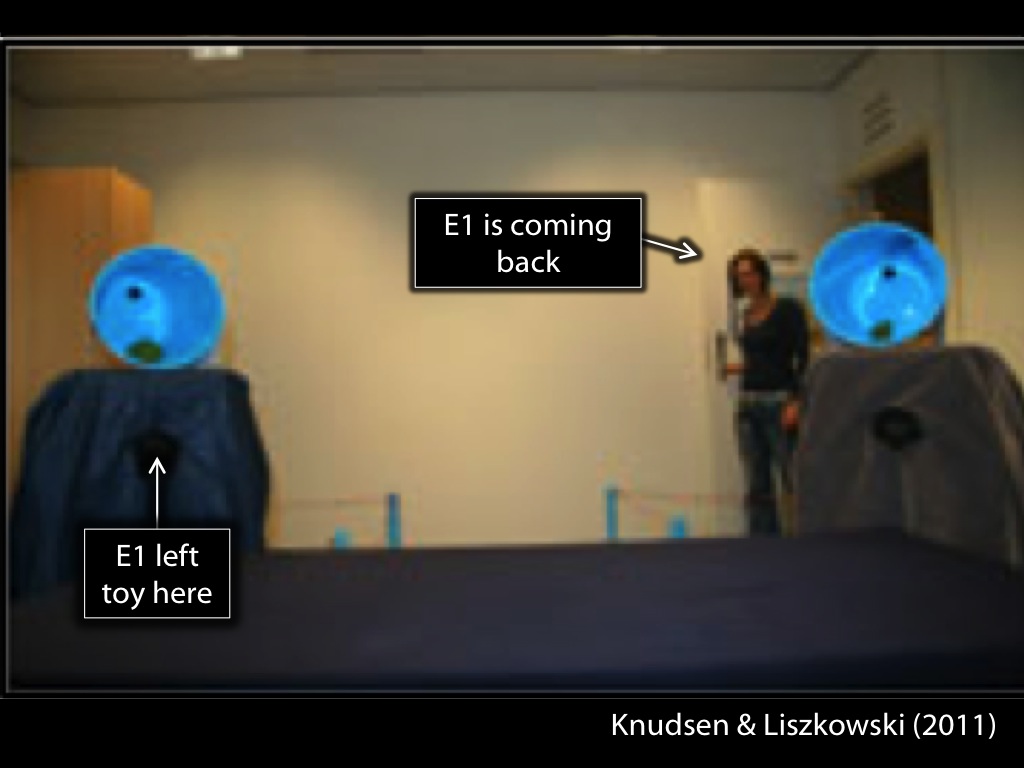

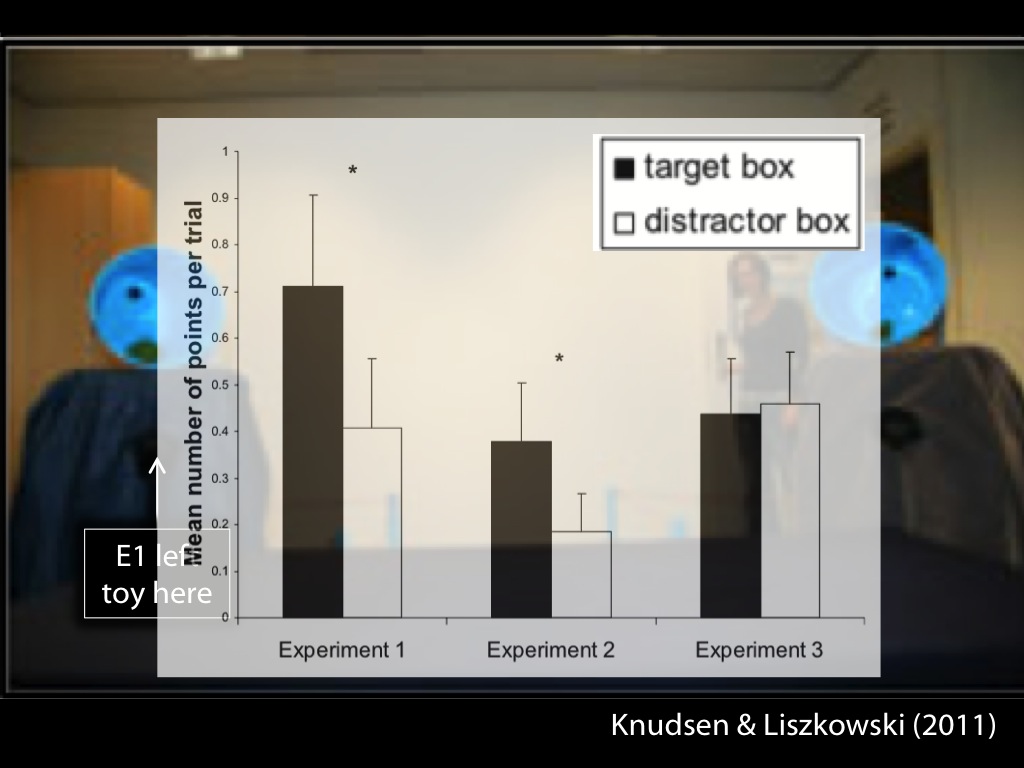

An agent’s \emph{field} is a set of objects related to the agent by proximity, orientation and other factors.

First approximation: an agent \emph{encounters} an object just if it is in her field.

A \emph{goal} is an outcome to which one or more actions are, or might be, directed.

%(Not to be confused with a \emph{goal-state}, which is an intention or other state of an agent linking an action to a particular goal to which it is directed.)

\textbf{Principle 1}: one can’t goal-directedly act on an object unless one has encountered it.

Applications: subordinate chimps retrieve food when a dominant is not informed of its location;\citep{Hare:2001ph} when observed, scrub-jays prefer to cache in shady, distant and occluded locations.\citep{Dally:2004xf,Clayton:2007fh}

First approximation: an agent \emph{registers} an object at a location just if she most recently encountered the object at that location.

A registration is \emph{correct} just if the object is at the location it is registered at.

\textbf{Principle 2}: correct registration is a condition of successful action.

Applications: 12-month-olds point to inform depending on their informants’ goals and ignorance;\citep{Liszkowski:2008al} chimps retrieve food when a dominant is misinformed about its location;\citep{Hare:2001ph} scrub-jays observed caching food by a competitor later re-cache in private.\citep{Clayton:2007fh} %,Emery:2007ze

\textbf{Principle 3}: when an agent performs a goal-directed action and the goal specifies an object, the agent will act as if the object were actually in the location she registers it at.

Applications: some false belief tasks \citep{Onishi:2005hm,Southgate:2007js,Buttelmann:2009gy}
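As a rough illustration of how little machinery the three principles need, here is a hypothetical sketch of a tracker for one agent. It is not Butterfill and Apperly's own formalism: the class and method names are invented, the agent's field is supplied directly as a set of objects (proximity and orientation are abstracted away), and the only contents are objects and locations.

```python
from dataclasses import dataclass, field


@dataclass
class MinimalTracker:
    """Tracks one agent with the three principles of minimal theory of mind.

    A hypothetical sketch: the agent's field is given as a set of objects,
    and contents are just objects and locations, not propositions.
    """
    encountered: set = field(default_factory=set)
    registrations: dict = field(default_factory=dict)  # object -> location

    def observe(self, agent_field, world):
        """Update after a moment in which `agent_field` is the agent's field;
        `world` maps each object to its actual location."""
        for obj in agent_field:
            self.encountered.add(obj)             # in the field => encountered
            self.registrations[obj] = world[obj]  # most recent encounter wins

    def can_act_on(self, obj):
        """Principle 1: no goal-directed action on an unencountered object."""
        return obj in self.encountered

    def registration_correct(self, obj, world):
        """Principle 2: correct registration is a condition of success."""
        return self.registrations.get(obj) == world.get(obj)

    def predict_search(self, obj):
        """Principle 3: the agent acts as if obj is where she registers it."""
        return self.registrations.get(obj) if self.can_act_on(obj) else None


# A change-of-location false belief task.
world = {"ball": "basket"}
sally = MinimalTracker()
sally.observe({"ball"}, world)  # Sally sees the ball in the basket
world["ball"] = "box"           # moved while the ball is outside her field
sally.observe(set(), world)
```

Here the tracker predicts that Sally will search the basket, and Principle 2 marks that action as bound to fail, all without representing any proposition.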

Unlike the full-blown model, a minimal model

distinguishes attitudes by relatively simple functional roles,

and instead of using propositions or other complex abstract objects for

distinguishing among the contents of mental states,

it uses things like locations, shapes and colours which can be held in mind

using some kind of quality space or feature map.

Let me put it another way.

The canonical model of the mental is used for a wide range of things:

its roles are not limited to prediction; instead it also supports

explanation, regulation and story telling. In this way it's more like

a myth-making framework than a scientific one, although this is rarely

recognised.

A minimal model of the mental gets efficiency by being suitable only

for prediction and retrodiction.

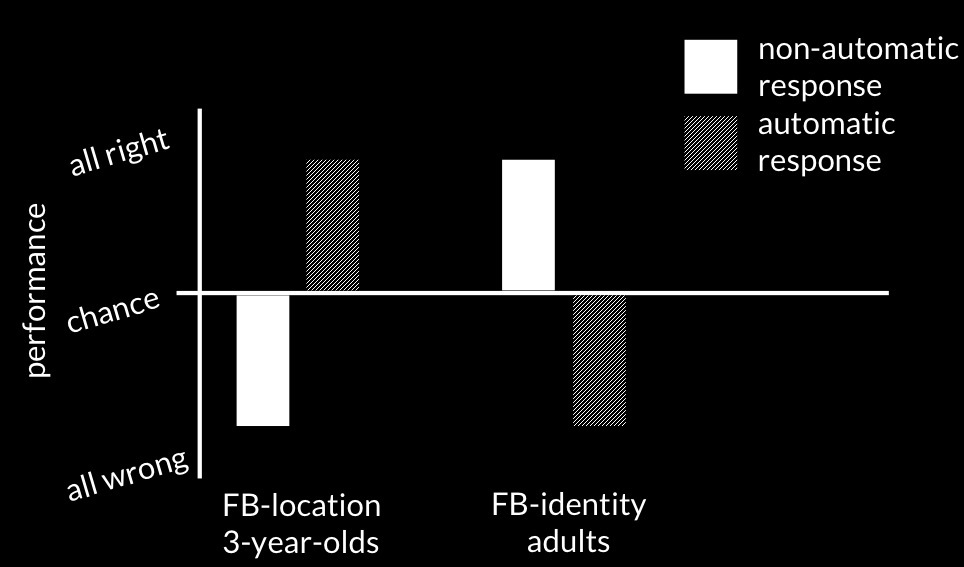

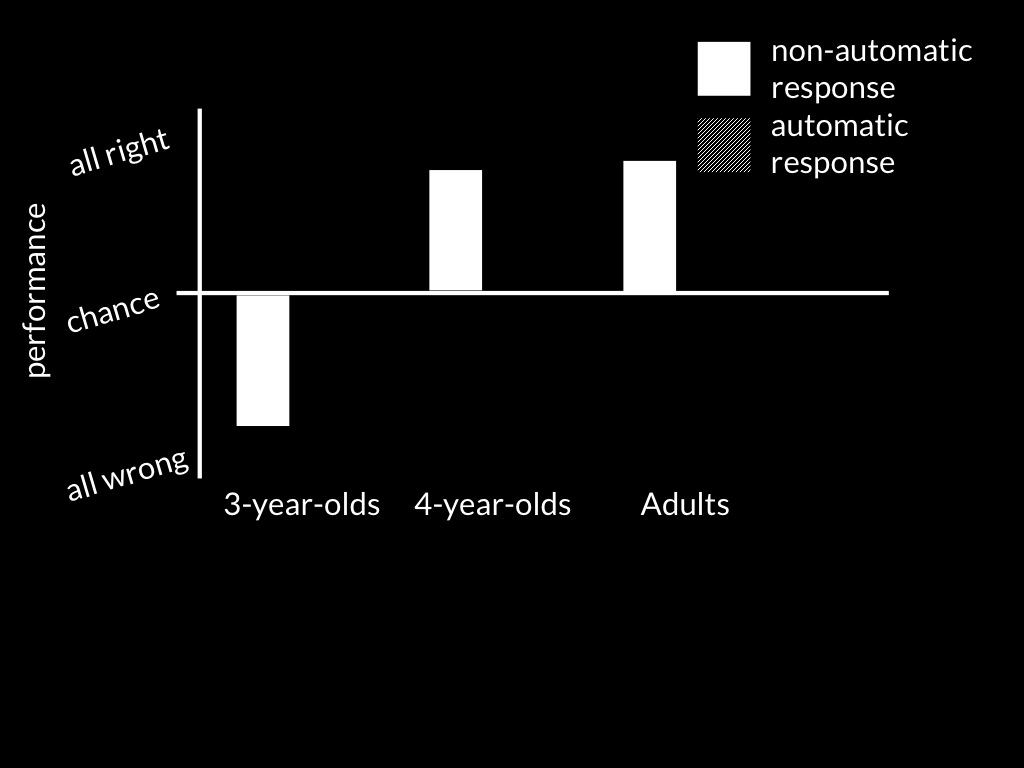

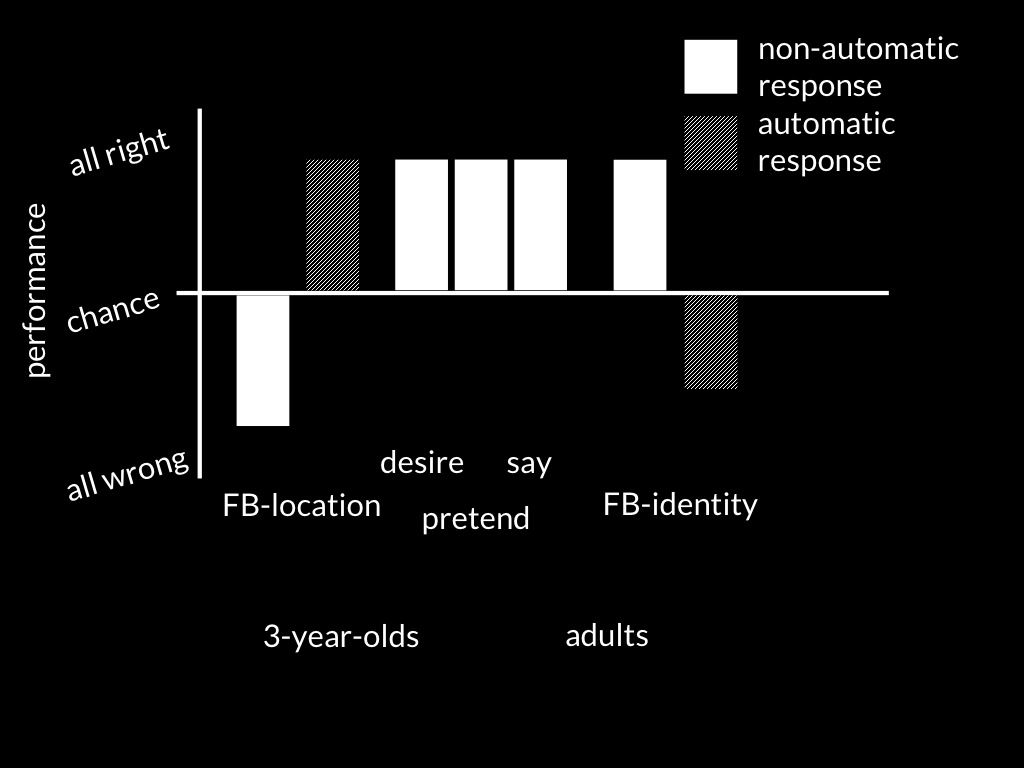

What about signature limits?

One signature limit on minimal models of the mental concerns

false beliefs about identity.

These are the kind of false belief Lois Lane has when she falsely

believes that Superman and Clark Kent are different people:

for the world to be as she believes it to be, there would have to be

two objects rather than one; her beliefs expand the world.

(This is for illustrating mistakes about identity.)

You might not realise that your bearded drinking pal ‘Ian’ and

the author ‘Apperly’ are one and the same person.

[Explain why minimal models can't cope with false beliefs about identity.]

Now on a canonical model of the mental, false beliefs involving identity

create no special problem. This is because the (Fregean) proposition that

Superman is flying is distinct from the proposition that Clark Kent is

flying. Different propositions, different beliefs.

By contrast, a minimal model of the mental uses relational attitudes like

registration; this means that someone using a minimal model is using

the objects themselves, not representational proxies for them, to keep

track of different beliefs.

Consequently knowing that Superman is Clark Kent prevents a minimal

mindreader from tracking Lois’ false beliefs about identity.

This is why false beliefs about identity are a signature limit of

minimal models of the mental.
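The contrast can be made concrete with a toy sketch, assuming a mindreader who already knows that Superman and Clark Kent are one person. The identifier `"kal-el"`, the function name and the locations are hypothetical; keying beliefs by name is only a crude stand-in for individuating them by distinct Fregean propositions.

```python
# The mindreader knows that Superman and Clark Kent are one person, so a
# relational (minimal) tracker maps both names to the same object.
# "kal-el" is a hypothetical identifier for that one object.
REFERENT = {"Superman": "kal-el", "Clark Kent": "kal-el"}


def predict_search(episodes, relational):
    """Where a tracker expects Lois to look for each of the two men.

    episodes: (name under which Lois meets him, location), oldest first.
    relational=True keys registrations by the object itself (minimal model);
    relational=False keys beliefs by mode of presentation, standing in for
    distinct Fregean propositions (canonical model).
    """
    def key(name):
        return REFERENT[name] if relational else name

    store = {}
    for name, location in episodes:
        store[key(name)] = location  # most recent episode overwrites
    return {name: store.get(key(name)) for name in REFERENT}


episodes = [("Superman", "rooftop"), ("Clark Kent", "newsroom")]
```

Lois takes the two to be different people, so she should be expected to look for Superman on the rooftop. The propositional tracker gets this right; the relational tracker has only one key for the one object, so the later newsroom episode overwrites the earlier one and it predicts the newsroom for both.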

‘an impetus heuristic could yield an approximately correct (and adequate) solution ... but would require less effort or fewer resources than would prediction based on a correct understanding of physical principles.’

Hubbard (2014, p. 640)

\citet[p.\ 450]{kozhevnikov:2001_impetus}:

‘To extrapolate objects’ motion on the basis of physical

principles, one should have assessed and evaluated the presence and magnitude of such

imperceptible forces as friction and air resistance operating in the real world. This would

require a time-consuming analysis that is not always possible. In order to have a survival

advantage, the process of extrapolation should be fast and effortless, without much conscious

deliberation. Impetus theory allows us to extrapolate objects’ motion quickly and without large

demands on attentional resources.’

\citep[p.\ 640]{hubbard:2013_launching}:

‘prediction based on an impetus heuristic could yield an approximately

correct (and adequate) solution [...] but would require less effort or fewer

resources than would prediction based on a correct understanding of physical principles.’


Kozhevnikov & Hegarty (2001, figure 1)

simplified from Kozhevnikov & Hegarty (2001)

simplified from Kozhevnikov & Hegarty (2001)